Torch Set_Detect_Anomaly. `torch.autograd.set_detect_anomaly` enables or disables autograd anomaly detection based on its argument `mode`. It can be used as a context manager or as a function. Calling `torch.autograd.set_detect_anomaly(True)` makes autograd raise the error together with a stack trace of the forward operation involved, which makes it easier to debug which operation is at fault. It is most often suggested for the error "one of the variables needed for gradient computation has been modified by an inplace operation", where it helps find the in-place operation that is responsible. For background, `torch.autograd` tracks operations on all tensors that have their `requires_grad` flag set to `True`. For tensors that don't require gradients, setting this flag to `False` excludes them from the gradient computation graph.
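As a rough illustration of the behaviour described above, the sketch below builds a tiny graph with a deliberate in-place bug and runs the backward pass with anomaly detection on and off. The helper names `backward_with_inplace_bug` and `tracking_demo` are made up for this example, and using `exp` followed by `add_` is just one assumed way to trigger the error. It is not taken from any of the pages listed here.

```python
import torch


def backward_with_inplace_bug(detect_anomaly: bool) -> str:
    """Run a tiny graph with an in-place bug and return the error message."""
    x = torch.ones(3, requires_grad=True)
    # set_detect_anomaly works as a context manager; the previous mode is
    # restored when the block exits.
    with torch.autograd.set_detect_anomaly(detect_anomaly):
        y = x.exp()   # exp keeps its output around for the backward pass
        y.add_(1)     # in-place edit invalidates that saved output
        try:
            y.sum().backward()
        except RuntimeError as err:
            return str(err)
    return ""


def tracking_demo() -> tuple:
    """Show that only tensors with requires_grad=True are tracked."""
    a = torch.ones(2, requires_grad=True)
    b = torch.ones(2)  # requires_grad defaults to False
    return (a * 2).requires_grad, (b * 2).requires_grad


if __name__ == "__main__":
    # With anomaly detection enabled, PyTorch additionally emits a warning
    # containing the traceback of the forward call that caused the failure.
    print(backward_with_inplace_bug(detect_anomaly=True))
    print(tracking_demo())
```

The function form, `torch.autograd.set_detect_anomaly(True)` called once near the top of a script, switches the mode on globally instead. Anomaly detection slows training down, so it is usually enabled only while debugging.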

from towardsdatascience.com

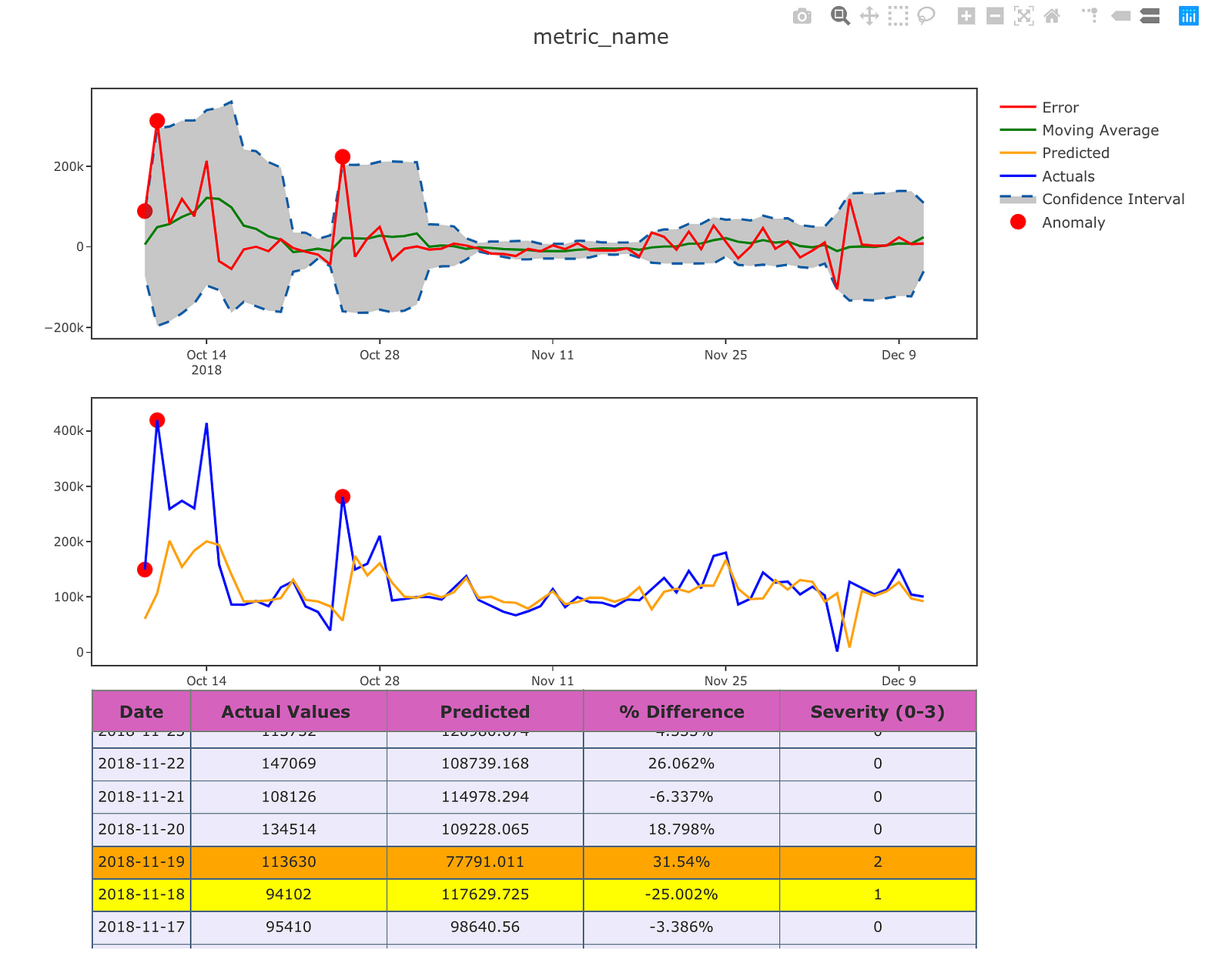

Anomaly Detection with Time Series Forecasting Towards Data Science

From www.youtube.com

Network Traffic Monitoring and Anomaly Detection with Striim YouTube

From github.com

autograd.grad with set_detect_anomaly(True) will cause memory leak

From www.ritchieng.com

Anomaly Detection Machine Learning, Deep Learning, and Computer Vision

From www.mathworks.com

Detect Anomalies in Signals Using deepSignalAnomalyDetector MATLAB

From blog.csdn.net

Usage of torch.autograd.set_detect_anomaly in mmdetection_mmdetection autograd

From logstail.com

Machine Learning Anomaly Detection Security Platform Logstail

From www.predictiveanalyticstoday.com

Top 10 Anomaly Detection Software in 2022 Reviews, Features, Pricing

From hackernoon.com

3 Types of Anomalies in Anomaly Detection HackerNoon

From github.com

Improve torch.autograd.set_detect_anomaly documentation · Issue 26408

From github.com

RuntimeError one of the variables needed for gradient computation has

From www.photonics.com

Anomaly Detection Expands Use of AI in Defect Inspections Features

From towardsdatascience.com

A Comprehensive Beginner’s Guide to the Diverse Field of Anomaly

From github.com

raises DataDependentOutputException with `torch

From constellix.com

RealTime Traffic Anomaly Detection Constellix

From jp.mathworks.com

Detect Anomalies in Signals Using deepSignalAnomalyDetector MATLAB

From www.youtube.com

Anomaly Detection Example YouTube